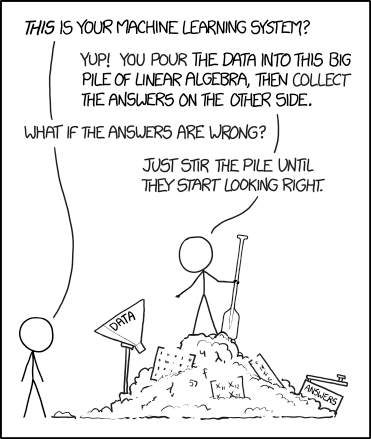

The recent innovations around large language models (LLMs) and generative AI lead to an interesting question: can machines think?

Source: xkcd #1838 Machine Learning

Defining the ability to “think”

What does it mean to “think”? Decades ago, many argued that thinking was an inherently human ability. Other beings, whether machines or animals, were not conscious and could not generate thoughts with the same creativity as humans. Machines, for instance, simply executed explicit instructions from a programmer; they were incapable of the so-called “thinking” we associate with humans.

But if we define “thought” as a construct specific to humans, we deny machines any opportunity to prove themselves conscious! Instead, we should examine the key characteristics of human thought that differentiate us from other creatures. What are these characteristics?

I argue that the process of thought matters more than the output of thought. When we see a 7-year-old child adding two-digit numbers together on a piece of paper, we see them engaged in thought. Yes, their thought process is almost algorithmic, but they are thinking nonetheless. On the other hand, when we punch numbers into a calculator and press the equals button, we don’t imagine the calculator “thinking” at all. We know that hidden electrical circuits within the calculator are rapidly firing to compute our answer.

With that in mind, some key characteristics of human thought are:

- thinking can be both free-form and structured

- thinking is influenced by our past experiences and current environment

- thinking requires a certain level of concentration or brainpower

Now, let’s think back to the calculator. The calculator follows a strictly structured process and is incapable of “free-form” thought: there is no randomness! Moreover, the calculator does not “learn” from the past; it’s simply programmed from the very start. So, the calculator cannot think.

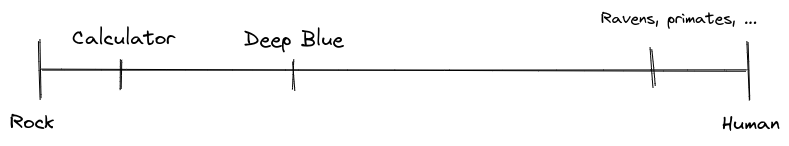

Thoughtfulness is a spectrum

What about a more complex system like Deep Blue? When Deep Blue beat Garry Kasparov in their 1997 rematch, many considered the victory a turning point in artificial intelligence. Deep Blue demonstrated that programs could achieve human-level performance not only in physical and robotic tasks, but also in creative and intellectual pursuits like chess. Yet this revolutionary machine was merely a brute-force search algorithm backed by vast computational power. By our criteria, Deep Blue is incapable of free-form thought and thus cannot “think” in the traditional sense.

But surely Deep Blue exhibits more capacity for thought than a calculator? To reconcile this, we can view “thought” as a spectrum, with inanimate objects like rocks at one end, humans at the other, and everything else falling in between. Side note: there may be more “thought”-ful creatures than humans that we haven’t discovered yet!

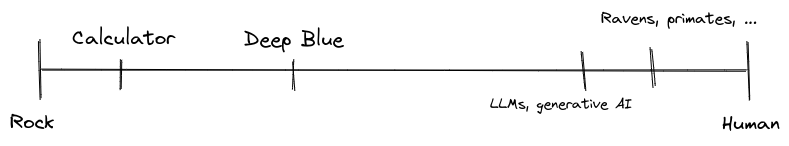

Where do LLMs fall on this spectrum? Going through our criteria:

- thinking can be both free-form and structured. LLMs exhibit free-form thought: they can reason about logic puzzles, hold conversations in 19th-century cowboy slang, and write entire essays on esoteric topics. At the same time, LLMs can demonstrate structured thought: techniques such as chain-of-thought prompting elicit a human-like, step-by-step reasoning process.

- thinking is influenced by our past experiences and current environment. Our past experiences are analogous to the vast amounts of training data, and our current environment is similar to the prompt given to the model.

- thinking requires a certain level of concentration or brainpower. These models rely on substantial computational power (their “brainpower”) to run at reasonable speeds.

So, I would place LLMs around ravens, certain primates, and other animals that exhibit consciousness.

But LLMs are nothing more than calculus!

“LLMs are nothing more than calculus and linear algebra!” you shout back. This is one of the main counterarguments I’ve heard: generative AI is fundamentally no different from an algorithm that computes the sum of numbers, or a chess-playing program like Deep Blue. At their core, these models consist of billions of parameters trained on vast amounts of data, and the process of “thought” is simply a sequence of matrix multiplications that combine in unique ways to produce meaning. LLMs are thus clever applications of mathematics and computing, nothing more and nothing less.
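To make this counterargument concrete, here is a minimal sketch of what “a sequence of matrix multiplications” looks like in a tiny neural network. The weights, layer sizes, and helper names (`matvec`, `relu`, `softmax`, `forward`) are made up purely for illustration; a real LLM uses a transformer architecture with attention and billions of learned parameters, but each step is the same kind of arithmetic.

```python
import math

# Hypothetical, hand-picked weights for illustration only.
# A real model learns its weights from training data.
W1 = [[0.5, -0.2],
      [0.1, 0.8],
      [-0.3, 0.4]]        # layer 1: 3 hidden units x 2 inputs
W2 = [[1.0, -1.0, 0.5],
      [-0.5, 0.7, 0.2]]   # layer 2: 2 outputs x 3 hidden units


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relu(v):
    """Elementwise nonlinearity: keep positive values, zero out negatives."""
    return [max(0.0, a) for a in v]


def softmax(v):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(v)  # subtract the max for numerical stability
    exps = [math.exp(a - m) for a in v]
    total = sum(exps)
    return [e / total for e in exps]


def forward(x):
    """Two-layer forward pass: multiply, nonlinearity, multiply, softmax."""
    hidden = relu(matvec(W1, x))
    return softmax(matvec(W2, hidden))


probs = forward([1.0, 2.0])
print(probs)  # two probabilities; the second is much larger than the first
```

Every number this produces is fully determined by the input and the weights. Whether stacking billions of such steps amounts to “thought” is exactly the question at issue.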

But at the end of the day, are we not all just a bunch of molecules that interact in fascinating ways to produce so-called “thought” and “consciousness”? As your eyes move down the page and read each word, billions of neurons fire, sending short bursts of chemical and electrical signals throughout your body. Is that any different from a node in a neural network propagating numbers and bits of information?

An interesting distinction is the one between analogue and digital systems. Analogue systems operate on the continuous physical properties of materials. In contrast, digital systems operate on discrete quantities, such as bits or on/off electrical signals. The human body is both analogue and digital, whereas generative AI exists solely in the digital space. Perhaps this is where the problem arises: we have trouble imagining that anything in the digital paradigm could be capable of thought. More generally, we seem to believe that anything artificial cannot “think.”

OK, so machines can think. What then?

Since the 17th century, when Galileo challenged the belief that the universe revolved around the earth, humans have shifted further away from their perceived role as the center of the universe. The idea that machines can think is another blow to our species' self-importance; it demonstrates that many of our inherent abilities, such as consciousness and meta-thought, are perhaps not specific to us. Moreover, this suggests the possibility of other life-forms as intelligent and capable as humans.

I’m excited to see how LLMs will progress in the coming years and shape our understanding of consciousness and thought.